Disclaimer: I am musing on the challenges I faced while trying to secure the reliable internet access I needed during a recent set of business trips, and on the process of developing various solutions to them. In general, these challenges are typical of the routine logistical challenges I face in the field, as no doubt do other field technicians. In no way am I trying to reflect negatively on my employer, who for the purposes of this entry shall remain nameless.

I was recently on a couple of business trips, depending on an iPad as a critical part of the execution of the contract. The destination was a small city of 25,656 people (according to Wikipedia), big enough to have plenty of internet access points, cell phones, and cell phone data. As far as I was concerned, in fact, I was in a mini-mini version of Montreal (home, for those who haven’t figured it out yet).

The way the iPad is set up, wifi internet access is required to transfer to the iPad the building plans needed for the work, and to transfer back the files and data collected in the field. I have made no bones about mentioning to some key people heading the overall project that this is a potential Achilles’ heel in its execution since, at least in the project’s fringe locations well beyond population centres, internet access would be a spotty luxury at best. My trips were at least symbolically close enough to those edges to underline the potential problem.

One of the first challenges I found was that the iPad didn’t seem to play well with the motel’s internet service (DataValet); although I did manage to get it to work once, it proved too frustrating to get working reliably. A colleague confirmed that he’d had similar problems getting Apple products to connect to DataValet. I had no trouble getting my personal computer running Fedora 21 Workstation to work with DataValet. In fact, apart from not recalling any wifi trouble over the years that was specific to Linux or Fedora, I would say the experience was even easier than in the past, since the daily leases seemed to renew automatically, although each renewal announced itself with a “convenient” automatic popup window. Likewise, my Windows-based work machine worked flawlessly with DataValet throughout, although if I remember correctly, I may occasionally have had to open a browser to renew the leases.

Adding to this challenge, my employer’s local office didn’t seem to have wifi; or at least, assuming that it *was* there as a hidden network, my work computer didn’t automatically connect to the corporate wifi when not plugged in to the corporate network, as it normally does at my home office.

My first solution was to put to use one of the reasons I had asked for a company smartphone in 2014: creating a hotspot with my work phone’s data plan to do data transfers whenever I wasn’t in a wifi zone that worked well for the iPad. However, between the picture-heavy data and the iPad’s habit of running automatic background backups whenever it is connected to the internet (a feature to which I initially had a negative knee-jerk reaction, but which nonetheless proved useful at one point and since), my phone appeared to run through my data plan for the month: the internet connection through the phone suddenly stopped, while the phone kept working just fine as a phone. Having quickly checked the phone’s data usage logs and determined that I’d certainly reached the neighbourhood of the limit I believed I had (2 gigs), I assumed that my employer had set a limit on the phone’s contract that turned off data until the month rolled over, in order to avoid overage charges.

I later learned, upon my return home and standing in front of the IT tech responsible for the corporate cell phones, that the problem was presumed to be an unusual combination of settings, probably set by some esoteric app (of which I have very few, esoteric or otherwise, on my work phone), or possibly a SIM card problem, which turned off the phone’s data capabilities. In any case, the company has no policy of asking the cell service provider to cut off a phone’s data access, until the rollover date, once it reaches the plan’s “included data” limit. The lack of internet on the phone was “solved” by resetting the phone to factory settings; I should get instructions on how to do it myself should I face the problem again. 🙂

This led to a second solution: I used my personal phone to create a hotspot and consumed a bit of my personal data plan, which didn’t bother me too much, at least as long as it didn’t involve overage charges. Not that I checked, but given how little I used it, I’m sure I never got anywhere near that point.

The next solution also created another challenge, due to a flub on my part: my client finally gave the iPad wifi access at her various locations; however, I should have asked her to enable my work computer as well. Several times, after arriving at a site, I had to produce or modify extra plans on the computer and then transfer them onto the iPad, which meant the computer needed internet access too.

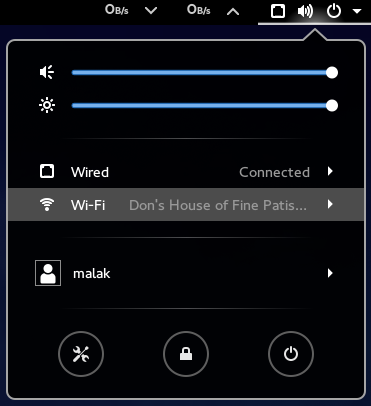

Finally, I realized that whenever I had wired access, yet another solution was available to me: I could set up my Linux laptop to create a wifi hotspot. This was rather easy, at least under the GNOME version in Fedora 21, and I believe it has been for quite a while in the GNOME 3 series, and probably before too. Unfortunately, this wasn’t a solution at the motel, since it only had wifi and no wired access, and I didn’t have a second, external wifi adapter with a cord to receive the motel’s connection and free up the built-in wifi card for the hotspot.

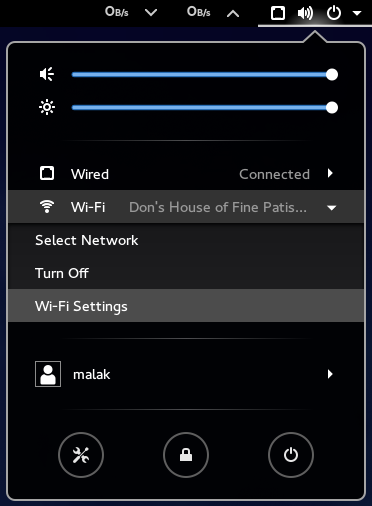

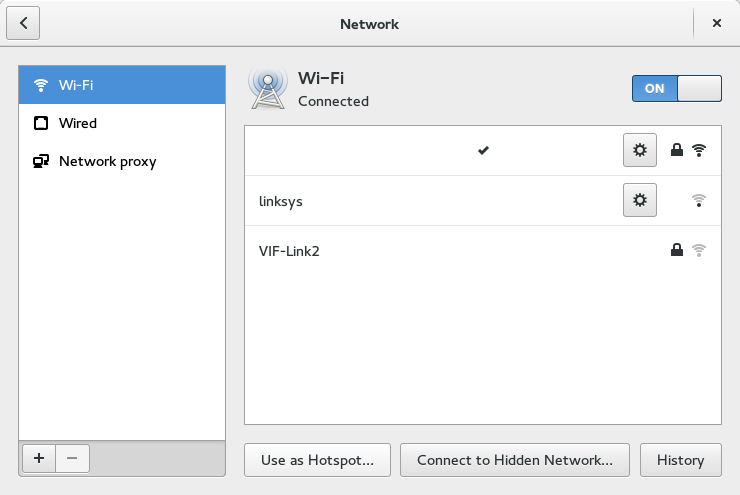

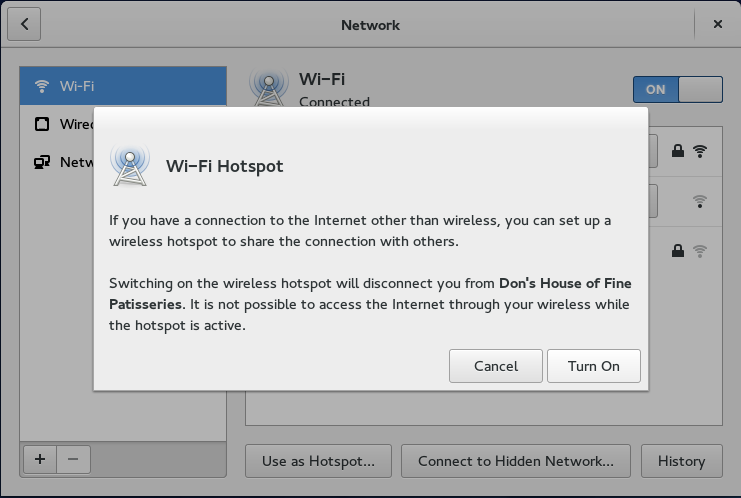

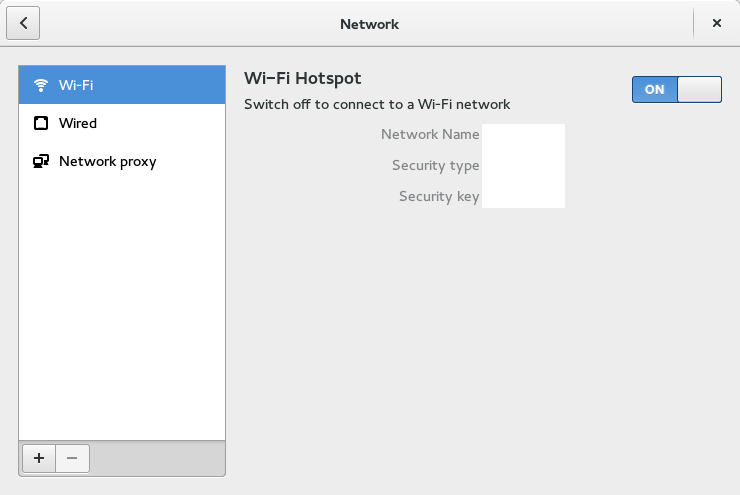

Here are some screenshots of how easy it is to setup a wifi hotspot under Gnome 3:
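For anyone who prefers a terminal to the GNOME dialogs, NetworkManager’s command-line client can do the same thing with `nmcli device wifi hotspot`. As far as I know, that subcommand arrived in NetworkManager releases newer than the one Fedora 21 shipped, so treat this as a sketch for a more recent system. The Python below only builds the command rather than running it; the helper name `nmcli_hotspot_command`, the interface name `wlp3s0`, the SSID and the password are all hypothetical placeholders.

```python
from __future__ import annotations

import shlex


def nmcli_hotspot_command(ifname: str, ssid: str, password: str) -> list[str]:
    """Build (but do not run) an nmcli command that starts a wifi hotspot.

    Assumes a NetworkManager release that supports `nmcli device wifi hotspot`.
    """
    # WPA2 passphrases must be between 8 and 63 characters long.
    if not 8 <= len(password) <= 63:
        raise ValueError("WPA2 passphrase must be 8 to 63 characters")
    return [
        "nmcli", "device", "wifi", "hotspot",
        "ifname", ifname,      # the wifi card that will broadcast the hotspot
        "ssid", ssid,          # network name the iPad and work laptop will see
        "password", password,
    ]


if __name__ == "__main__":
    # Placeholder values; substitute your own interface, SSID and passphrase.
    cmd = nmcli_hotspot_command("wlp3s0", "field-hotspot", "change-me-please")
    # Print a shell-quoted version for review; pass `cmd` to subprocess.run to execute it.
    print(shlex.join(cmd))
```

The wired connection keeps carrying the laptop’s own traffic while the wifi card is handed over to the hotspot, which is exactly why this trick needs either a cable or a second wifi card.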

Feeling a bit curious along the lines of “shouldn’t this be relatively easy under Windows, too?”, I checked on my work computer, and while it seemed possible, and indeed my brother once did it for me with his Windows computer, it was not obvious at all; in fact, I gave up after about four or five click-throughs with little end in sight.
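For the record, the Windows route I was hunting for is, as far as I can tell, the “hosted network” feature driven through `netsh wlan`. It only works if the wifi driver supports it (`netsh wlan show drivers` should report “Hosted network supported”), the commands typically need an elevated command prompt, and actually sharing the wired connection still requires enabling Internet Connection Sharing on the wired adapter, a separate GUI step not covered here. The sketch below, with a hypothetical helper name and placeholder SSID and key, just assembles the two commands.

```python
from __future__ import annotations


def windows_hosted_network_commands(ssid: str, key: str) -> list[list[str]]:
    """Build (but do not run) the netsh commands for a Windows hosted network.

    Assumes a wifi driver that supports hosted networks; run from an
    elevated prompt on Windows.
    """
    # The hosted network uses WPA2-PSK, so the key must be 8 to 63 characters.
    if not 8 <= len(key) <= 63:
        raise ValueError("WPA2 key must be 8 to 63 characters")
    return [
        # Step 1: allow the hosted network and set its name and key.
        ["netsh", "wlan", "set", "hostednetwork", "mode=allow", f"ssid={ssid}", f"key={key}"],
        # Step 2: start broadcasting.
        ["netsh", "wlan", "start", "hostednetwork"],
    ]


if __name__ == "__main__":
    for cmd in windows_hosted_network_commands("field-hotspot", "change-me-please"):
        print(" ".join(cmd))
```

Two commands plus a trip into the adapter settings is not much, but none of it is discoverable by clicking around, which was my point.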

So, at the local office, having set up my laptop to create a local wifi hotspot, I ended up with a mildly-amusing-to-me setup on my temporary desk: my personal laptop plugged into the corporate network and running a hotspot on its wifi card, with my work computer connected to it over wifi for normal use and the iPad connected to it for data transfers.

Back at my home office, I mentioned my difficulties in getting internet access to my supervisor (who isn’t a computer techie type), who thought that creating a hotspot under Windows couldn’t be done, or at least didn’t realize it could be.

Discussing this with him further, I explained the situation: “I don’t mind trying to find other solutions (that *is* my job), but after losing both of my A plans (the motel internet not working for the iPad, and no wifi at the office), then suddenly losing my plan B (the company cell phone’s internet not working), having to depend on my personal phone’s data plan, then on the client’s internet access without enough access for all my devices, and finally coming up with a part-time replacement for one of the A plans using a second personal resource, my personal laptop, there’s a problem here,” to which he agreed.

Jovially, he did however suggest that “in the next leg of your travels, I happen to know that if you can go to the local library, they have free wifi”. This made me realize that, if necessary and possible, I could also try the free wifi at the local Tim Hortons (a popular Canadian chain of coffee and doughnut shops), assuming there is one in the remote town I’ll be visiting next.

Which has me really thinking about the problem:

– not all the field techs in the company have smart phones with data plans, so not all of them can create the hotspot needed to carry out a project;

– not all the field techs have personal smart phones with a data plan, nor should field techs in general be required to use their personal data plans, let alone go into overage charges, in order to enable the execution of a project;

– at least at first glance, it doesn’t seem quick and easy to turn a Windows machine into a hotspot to enable such work. I don’t want to hear from the peanut gallery on this one, since I *know* that it *can* be done; my point is that even a moderately savvy user such as myself shouldn’t be left saying “It’s easy under GNOME 3, why isn’t it about as easy under Windows? Boy, it’s a good thing I had my personal laptop with me!” (On a side note, the usual stereotype is “Windows is easy, and Mac easier, but isn’t Linux hard?” 🙂 )

– and only a limited number of computer users are running GNOME 3, where it is easy to set up a hotspot if you have either a wired connection to the internet or two wifi cards in your computer. (I’ll have to check with my brother, who uses XFCE on one of his laptops, which is technically identical to mine, to see how easy it is under that desktop; it’s obviously technically possible, so I imagine it’s just a question of how easily each desktop exposes the functionality.)

Which leads me back to the above-mentioned problem of “what do you do in remote, small villages where you don’t have a corporate office with wifi, motel / B&B internet access is spotty at best, there’s no cell phone coverage, and there are few if any public wifi spots like a restaurant or a public library?”

I just hope that the library’s free wifi isn’t provided by DataValet. 🙂